Bagging predictors. Machine Learning

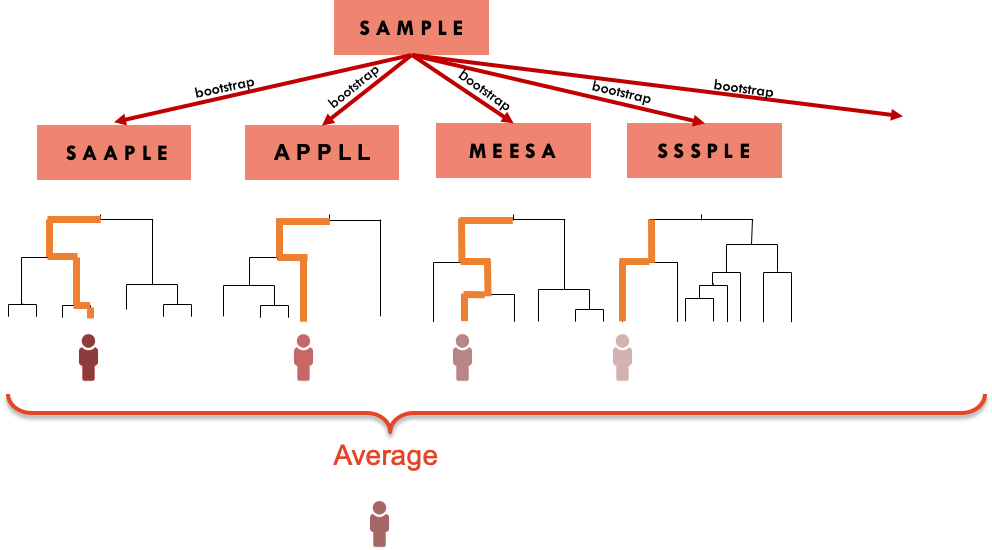

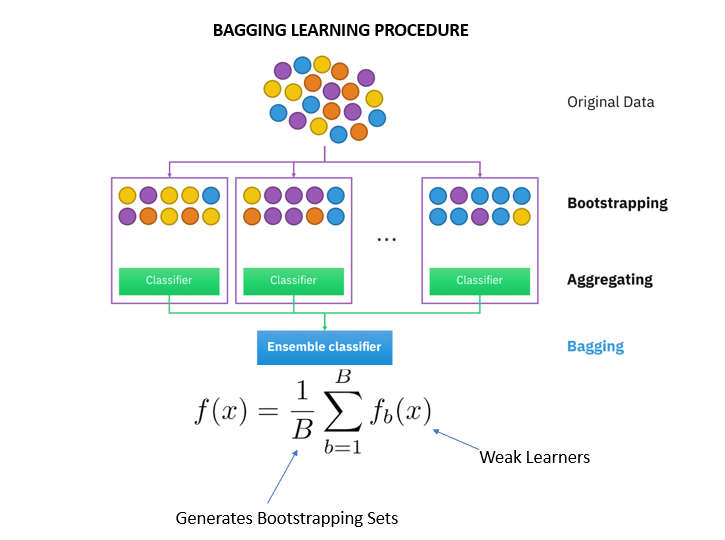

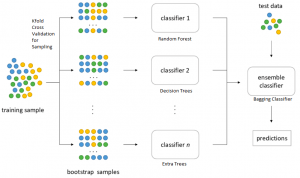

Bootstrap aggregating, also called bagging, is a machine learning ensemble meta-algorithm designed to improve the stability and accuracy of machine learning algorithms used in statistical classification and regression. It also reduces variance and helps to avoid overfitting. Although it is usually applied to decision tree methods, it is not limited to them.

Chapter 10 Bagging Hands On Machine Learning With R

Bagging Predictors. Leo Breiman (leo@stat.berkeley.edu), Statistics Department, University of California, Berkeley, CA 94720.

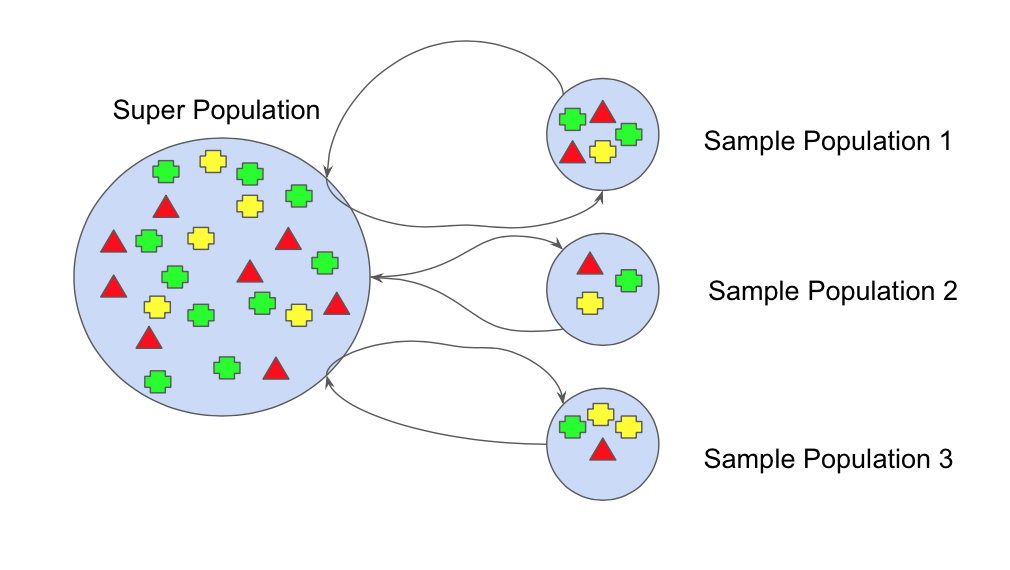

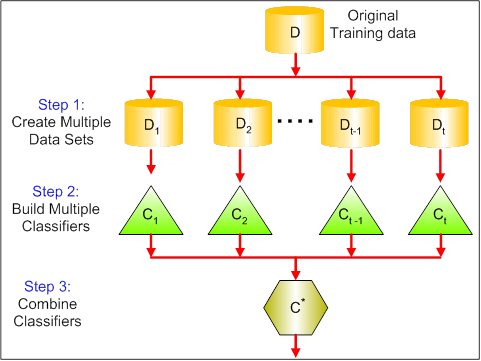

For a subsampling fraction of approximately 0.5, subagging achieves nearly the same prediction performance as bagging while coming at a lower computational cost. Bagging and pasting. Bagging predictors is a method for generating multiple versions of a predictor and using these to get an aggregated predictor.

Breiman, L. (1996). Bagging predictors. Machine Learning, 24, 123-140. © 1996 Kluwer Academic Publishers, Boston.

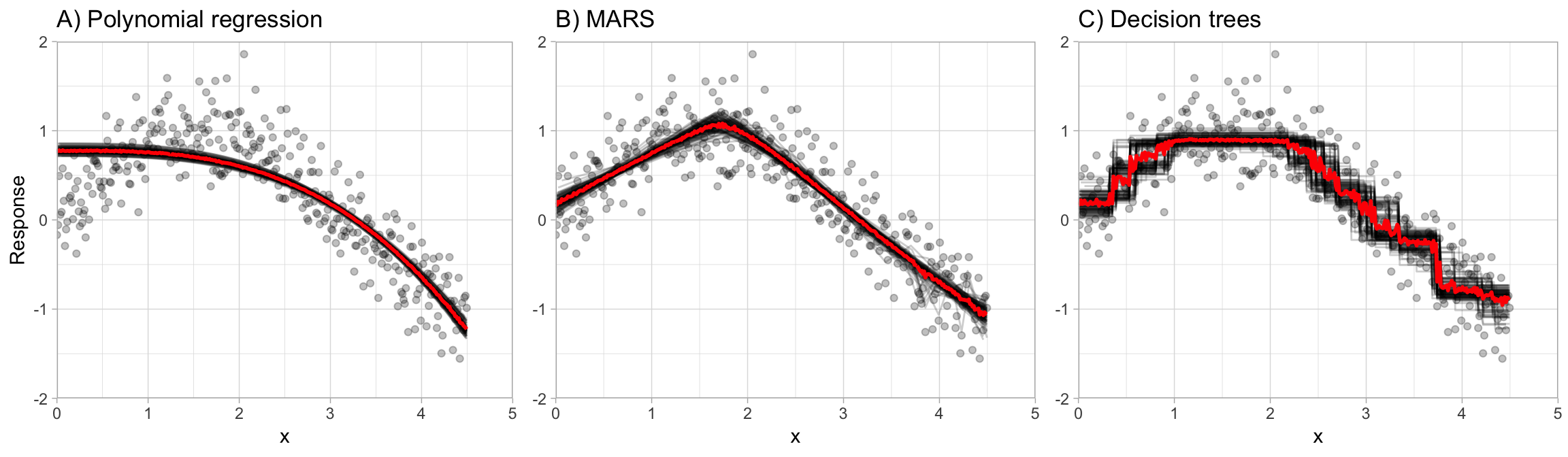

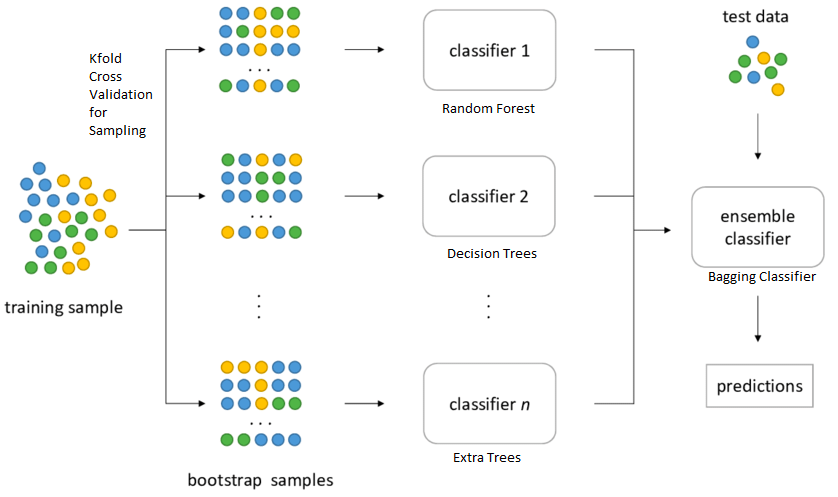

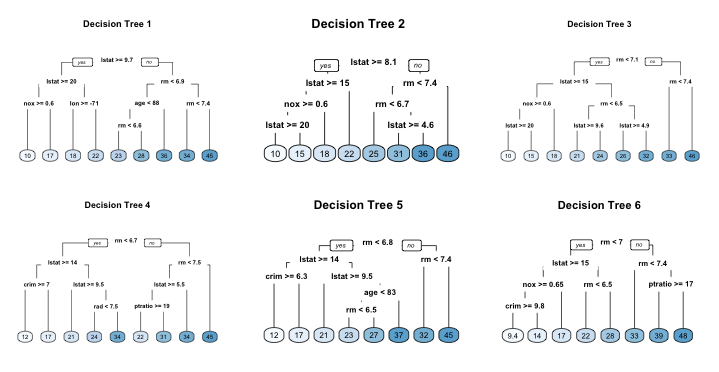

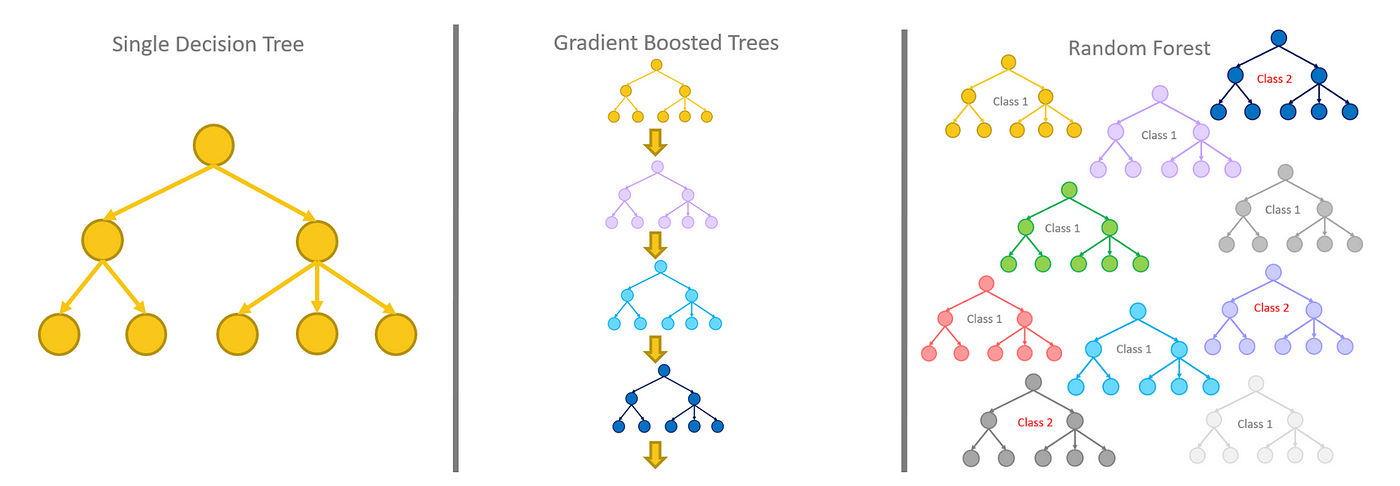

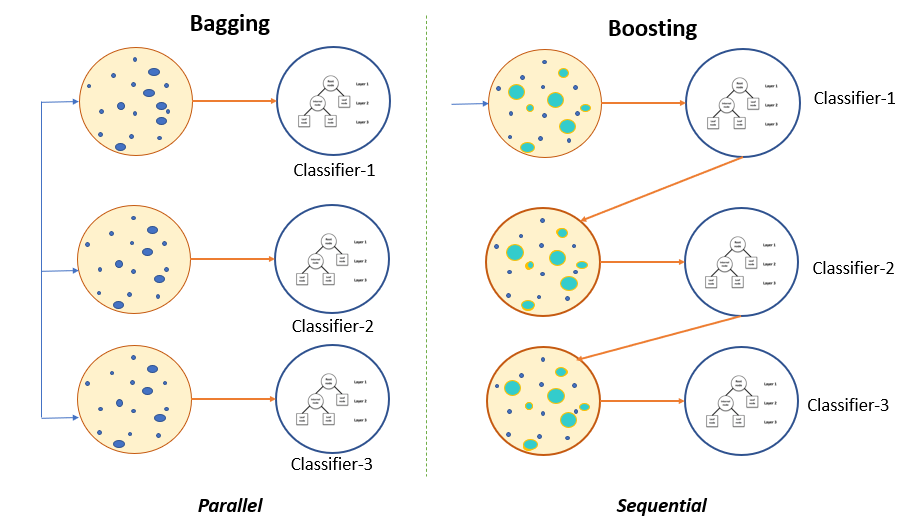

Bagging and boosting are two main types of ensemble learning methods. "Improving nonparametric regression methods by bagging and boosting." Other high-variance machine learning algorithms can be used, such as a k-nearest neighbors algorithm with a low k value, although decision trees have proven to be the most effective.

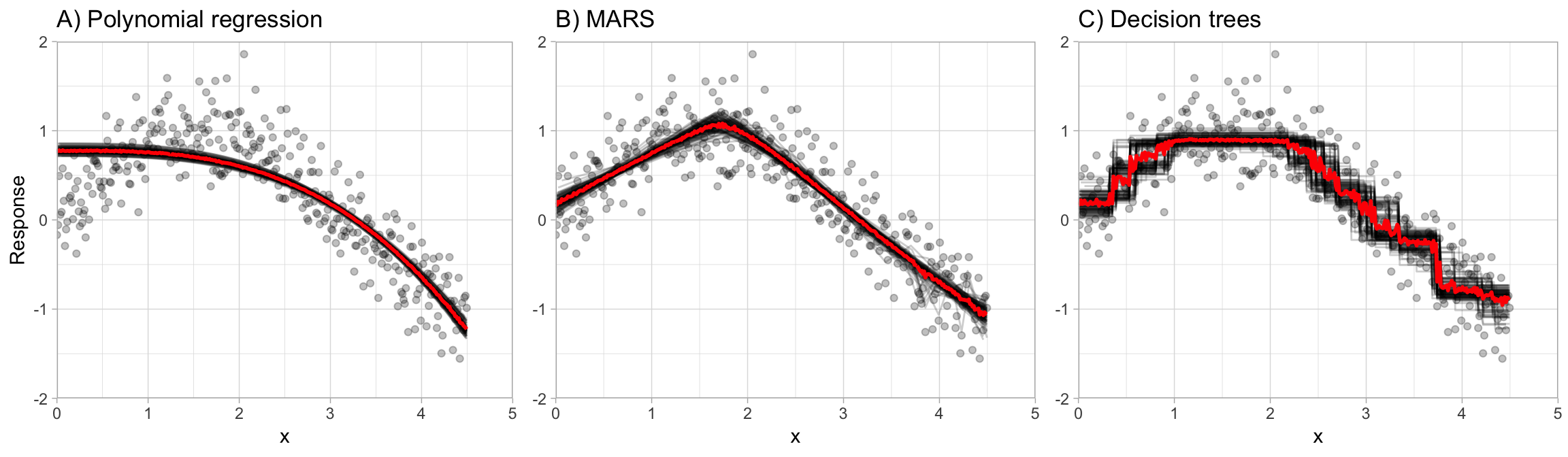

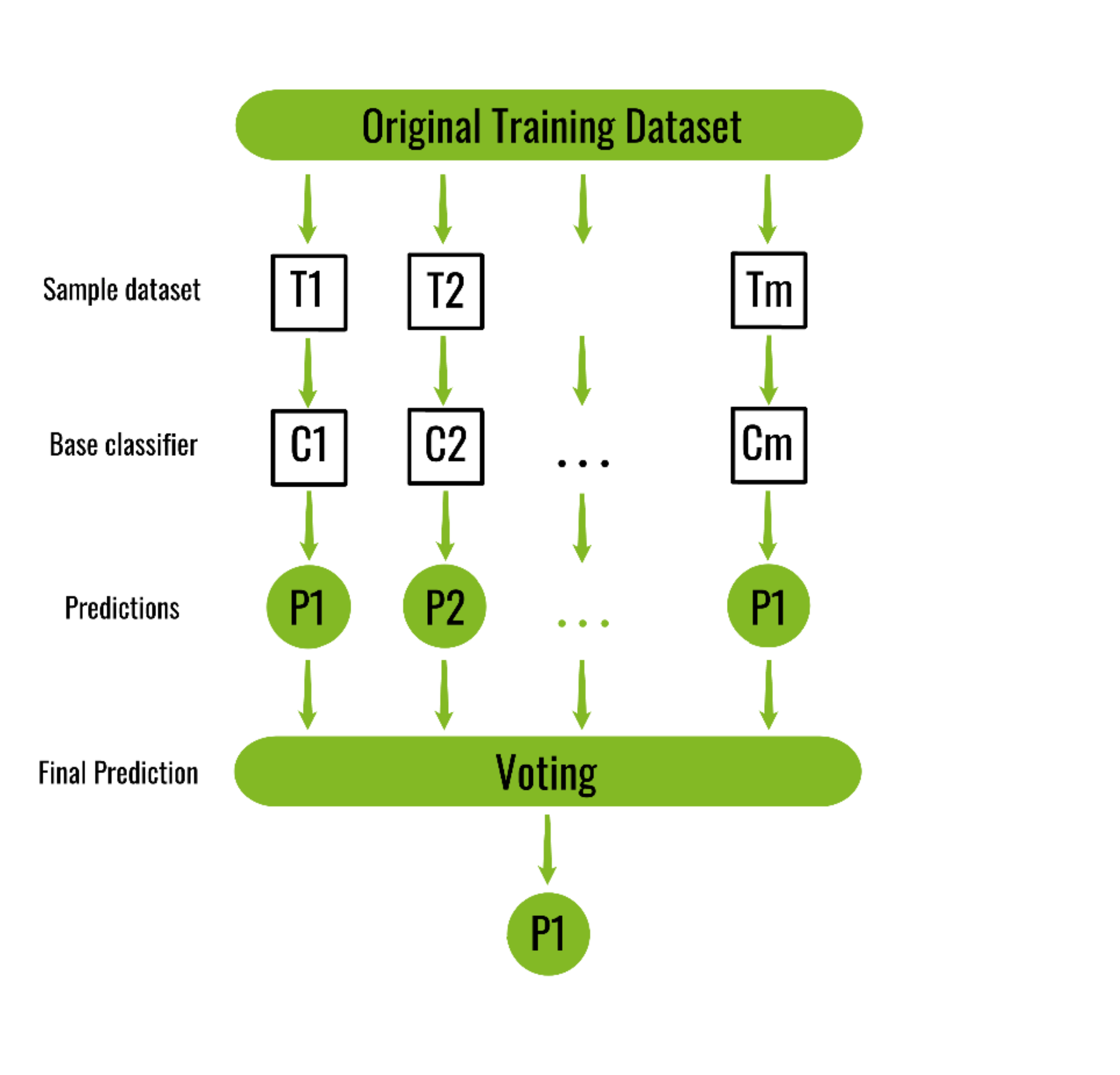

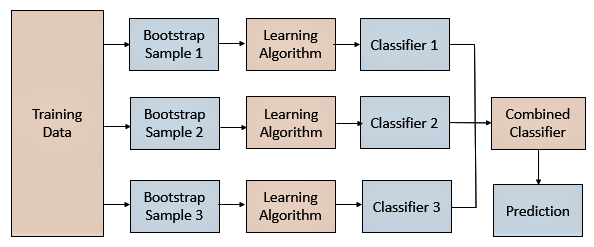

In Breiman's formulation, the multiple versions of the predictor are formed by making bootstrap replicates of the learning set.

Machine learning (ML) methods use ensemble techniques to improve recognition and prediction reliability. A Case Study in Venusian Volcano Detection. The results of repeated tenfold cross-validation experiments for predicting the QLS and GAF functional outcomes of schizophrenia with clinical symptom scales, using machine learning predictors such as the bagging ensemble model with feature selection, the bagging ensemble model, MFNNs, SVM, linear regression, and random forests.

Computational Statistics and Data Analysis. Breiman, L. Bagging predictors. Machine Learning, Vol.

These methods frequently help to alleviate the overfitting problem by integrating and aggregating numerous weak learners. Bagging Predictors, by Leo Breiman, Technical Report No. 421.

If perturbing the learning set can cause significant changes in the predictor constructed, then bagging can improve accuracy.

Technical Report No. 421, September 1994. Partially supported by NSF grant DMS-9212419. Department of Statistics, University of California, Berkeley, California 94720.

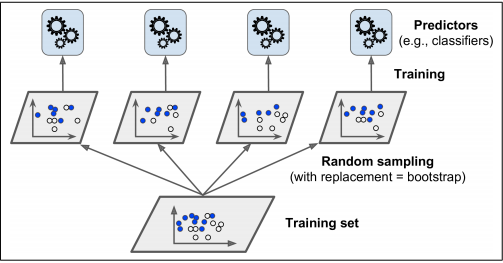

Bagging, short for bootstrap aggregating, creates a dataset by sampling the training set with replacement. Bagging and pasting are both techniques for creating varied subsets of the training data.

The subsets produced by these techniques are then used to train the predictors of an ensemble. Random Forest is a type of ensemble machine learning algorithm called Bootstrap Aggregation, or bagging.
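The two sampling schemes can be sketched in a few lines of Python. This is a minimal illustration using only the standard library. The function names `bagging_sample` and `pasting_sample` are hypothetical helpers chosen for this sketch, and pasting is taken here to mean sampling without replacement.

```python
import random


def bagging_sample(data, size=None, rng=random):
    """Draw a bootstrap replicate: sample WITH replacement.

    By default the replicate has the same size as the original data,
    so some instances repeat and others are left out.
    """
    size = len(data) if size is None else size
    return [rng.choice(data) for _ in range(size)]


def pasting_sample(data, size, rng=random):
    """Draw a subset WITHOUT replacement (assumed meaning of pasting)."""
    return rng.sample(data, size)


# Seeded generator so the sketch is reproducible.
rng = random.Random(0)
training_set = list(range(10))

bag = bagging_sample(training_set, rng=rng)       # may contain duplicates
paste = pasting_sample(training_set, 5, rng=rng)  # never contains duplicates
```

A bootstrap replicate of size n contains, on average, a fraction 1 - (1 - 1/n)^n of the distinct training instances, which approaches 1 - 1/e (about 63%) as n grows. The instances that are left out differ from replicate to replicate, which is what makes the trained predictors vary.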

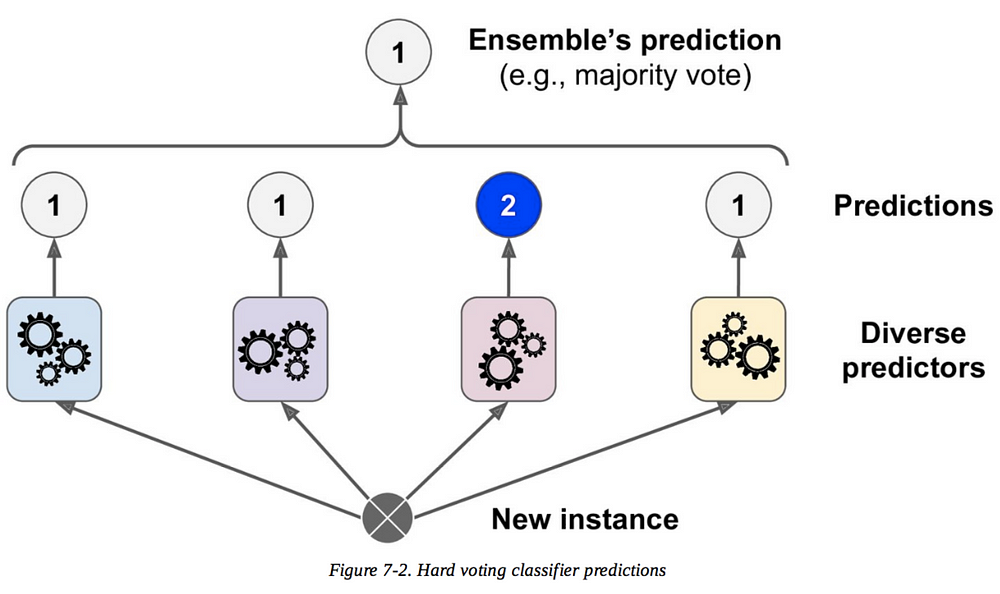

In this post, you will discover the Bagging ensemble algorithm and the Random Forest algorithm for predictive modeling. Bagging and boosting ensembles. Visual showing how training instances are sampled for a predictor in bagging ensemble learning.

Manufactured in The Netherlands. Random Forest is one of the most popular and most powerful machine learning algorithms. Breiman, L. (2001). Random forests.

The aggregation averages over the versions when predicting a numerical outcome and does a plurality vote when predicting a class. In bagging, weak learners are trained in parallel, but in boosting, they learn sequentially. In the above example, the training set has 7.
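The aggregation rule (averaging for a numerical outcome, plurality vote for a class) can be sketched as follows. The function names are hypothetical and chosen for this illustration. The tie-breaking behavior is an assumption of this sketch, not something the source specifies.

```python
from collections import Counter
from statistics import mean


def aggregate_regression(predictions):
    """Average the numerical predictions of the individual versions."""
    return mean(predictions)


def aggregate_classification(predictions):
    """Return the class predicted most often (plurality vote).

    Assumption of this sketch: on a tie, the class that appears first
    in `predictions` wins, because Counter.most_common keeps
    first-encountered order among equal counts.
    """
    return Counter(predictions).most_common(1)[0][0]


# Three versions of a predictor vote on one test instance.
numeric = aggregate_regression([1.0, 2.0, 3.0])
label = aggregate_classification(["cat", "dog", "cat"])
```

Because each version is trained independently of the others, the individual predictions passed to these functions can be produced in parallel, which is the contrast with boosting drawn above.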

We see that both the bagged and subagged predictors outperform a single tree in terms of mean squared prediction error (MSPE). Bagging helps to avoid overfitting and is used for both regression and classification. Bagging, also known as bootstrap aggregating, is an ensemble learning technique that helps to improve the performance and accuracy of machine learning algorithms.

Feature Engineering and Classifier Selection. Bootstrap aggregating, also called bagging, is one of the first ensemble algorithms.

Bagging is used to deal with bias-variance trade-offs and reduces the variance of a prediction model.
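The variance reduction can be made concrete with a standard identity. The notation is an assumption of this sketch and does not come from the source: suppose the B individual predictions at a point x are identically distributed, each with variance σ², and any two of them have correlation ρ. The variance of their average is then:

```latex
\operatorname{Var}\!\left(\frac{1}{B}\sum_{b=1}^{B}\hat{f}_b(x)\right)
  = \rho\,\sigma^{2} + \frac{1-\rho}{B}\,\sigma^{2}
```

As B grows, the second term vanishes, so the variance falls toward ρσ². Averaging therefore helps most when the individual predictors are only weakly correlated, and it cannot remove the part of the variance that they share.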

In Section 2.4.2, we learned about bootstrapping as a resampling procedure that creates b new bootstrap samples by drawing samples with replacement from the original training data. In customer relationship management, it is important for e-commerce businesses to attract new customers and retain existing ones.

This chapter illustrates how we can use bootstrapping to create an ensemble of predictions. Research on customer churn prediction using AI technology is now a major part of e-commerce management. This paper proposes a churn prediction model.
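To show how bootstrapping can produce an ensemble of predictions, here is a minimal end-to-end sketch for regression. It is not taken from any of the works quoted here. The base learner is a one-split regression tree (a stump), and the names `fit_stump` and `fit_bagged`, the synthetic data, and the default of 25 estimators are all assumptions made for illustration. Setting `fraction=0.5, replace=False` gives one plausible reading of subagging.

```python
import random
from statistics import mean


def fit_stump(points):
    """Fit a one-split regression tree to (x, y) pairs by least squares."""
    points = sorted(points)
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    best = None  # (sse, threshold, left_mean, right_mean)
    for i in range(1, len(points)):
        if xs[i] == xs[i - 1]:
            continue  # cannot split between identical x values
        left, right = ys[:i], ys[i:]
        lm, rm = mean(left), mean(right)
        sse = sum((y - lm) ** 2 for y in left) + sum((y - rm) ** 2 for y in right)
        if best is None or sse < best[0]:
            best = (sse, (xs[i - 1] + xs[i]) / 2, lm, rm)
    if best is None:  # all x identical: fall back to the overall mean
        overall = mean(ys)
        return lambda x: overall
    _, threshold, lm, rm = best
    return lambda x: lm if x <= threshold else rm


def fit_bagged(points, n_estimators=25, fraction=1.0, replace=True, seed=0):
    """Train one stump per resampled learning set and average their outputs."""
    rng = random.Random(seed)
    size = max(2, int(fraction * len(points)))
    models = []
    for _ in range(n_estimators):
        if replace:  # bootstrap replicate (bagging)
            sample = [rng.choice(points) for _ in range(size)]
        else:        # subsample without replacement (subagging)
            sample = rng.sample(points, size)
        models.append(fit_stump(sample))
    return lambda x: mean(model(x) for model in models)


# Synthetic noisy step function (hypothetical data for this sketch).
data_rng = random.Random(42)
train = [(x / 10, (1.0 if x >= 25 else 0.0) + data_rng.gauss(0, 0.3))
         for x in range(50)]

single = fit_stump(train)
bagged = fit_bagged(train)
subagged = fit_bagged(train, fraction=0.5, replace=False)
```

Calling `single(2.4)`, `bagged(2.4)`, and `subagged(2.4)` near the step shows the practical difference: the single stump jumps abruptly at one threshold, while the averaged predictors blend the slightly different thresholds found on each resampled learning set.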

As highlighted in this study, the main difference between bagging and boosting is the way in which they are trained.

Bagging Machine Learning Through Visuals 1 What Is Bagging Ensemble Learning By Amey Naik Machine Learning Through Visuals Medium

Bagging And Pasting In Machine Learning Data Science Python

2 Bagging Machine Learning For Biostatistics

Ensemble Methods In Machine Learning Bagging Versus Boosting Pluralsight

Ensemble Learning 5 Main Approaches Kdnuggets

The Concept Of Bagging 34 Download Scientific Diagram

Bootstrap Aggregating Wikiwand

Illustrations Of A Bagging And B Boosting Ensemble Algorithms Download Scientific Diagram

Ml Bagging Classifier Geeksforgeeks

Bagging Classifier Instead Of Running Various Models On A By Pedro Meira Time To Work Medium

Bagging Classifier Python Code Example Data Analytics

Ensemble Models Bagging Boosting By Rosaria Silipo Analytics Vidhya Medium

Tree Based Algorithms Implementation In Python R

Bagging Bootstrap Aggregation Overview How It Works Advantages

How To Use Bagging Technique For Ensemble Algorithms A Code Exercise On Decision Trees By Rohit Madan Analytics Vidhya Medium
